Welcome to Viewtiful’s GitHub Pages

1. Project Overview

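- The project analyzes sound in real time and conveys designated situations through vibration and a display, helping deaf users who cannot hear the sound recognize what is happening. If the user wishes, it also recognizes speech and shows it on the display.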

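- The goal is to develop a glasses-type device with a transparent display, together with its software, that supports smooth communication, for example by playing back preset sentences in response to designated gestures. Here, a "designated situation" includes emergencies such as a car horn or an abnormal noise that stands out from everyday sounds. It also includes designated phrases such as the user's own name or a demonstrative pronoun like ‘저기’ (roughly "over there", often used to get someone's attention).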

- By letting deaf users recognize these designated situations, the device helps them prepare for emergencies and respond appropriately to spoken prompts.

2. Team

Viewtiful, the team developing SoundView, consists of four undergraduate students.

고가을 (Team Leader)

- email : gaeulgo2@gmail.com

- facebook : https://www.facebook.com/go.gaeul

김예린

- email : ylynnkim@gmail.com

- facebook : https://www.facebook.com/yealynn.kim

류성호

- email : kalim55555@gmail.com

- facebook : https://www.facebook.com/profile.php?id=100002293450549

정승우

- email : tmddnnim2da@gmail.com

- facebook : https://www.facebook.com/seungwoo.jeong.75

3. Abstract

‘SoundView’ carries the meaning ‘eyes before eyes’. It is a pair of smart glasses designed to detect dangerous situations around hearing-impaired users and to help them communicate their intended response to the people around them more quickly and conveniently.

It is worn like an ordinary pair of glasses, recognizes surrounding sounds, and shows what it recognized on a transparent display so that the wearer can react to the sound immediately. A microphone module attached to the glasses captures the sound, a server analyzes it, and information about the sound is presented on the transparent display.
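The step from the server's analysis result to what the wearer sees can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the label names (`car_horn`, `abnormal_noise`, `speech`), the `Alert` type, and the `build_alert` function are all hypothetical, chosen only to mirror the designated situations described on this page.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical labels: the page only names car horns and abnormal noises
# as examples of emergency sounds.
EMERGENCY_LABELS = {"car_horn", "abnormal_noise"}


@dataclass
class Alert:
    message: str   # text to show on the transparent display
    vibrate: bool  # whether to also trigger the vibration alert


def build_alert(
    label: str,
    transcript: str = "",
    user_name: str = "",
    attention_words: Tuple[str, ...] = ("저기",),
) -> Optional[Alert]:
    """Map one analysis result to an alert, or None for everyday sounds."""
    if label in EMERGENCY_LABELS:
        return Alert(message=f"Warning: {label.replace('_', ' ')}", vibrate=True)
    if label == "speech":
        # Designated phrases: the wearer's name or an attention word.
        name_called = bool(user_name) and user_name in transcript
        word_heard = any(word in transcript for word in attention_words)
        if name_called or word_heard:
            return Alert(message=transcript, vibrate=True)
    return None
```

Under these assumptions, `build_alert("car_horn")` yields a vibrating warning, while an unrecognized everyday sound yields `None` and nothing is shown.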

In addition, the device recognizes the user's gestures through a camera module, searches the DB based on the analyzed result, and sends the corresponding sentence to a speaker. This lets the user say phrases such as “Thank you” or “See you again next time”: the device speaks the words the user needs.
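The gesture-to-sentence lookup described above might look something like the sketch below. It uses an in-memory SQLite database as a stand-in for the project's DB, whose real schema is not documented here. The table name `phrases`, the gesture names `thumbs_up` and `wave`, and both function names are assumptions made for illustration; only the two sentences come from this page.

```python
import sqlite3
from typing import Optional


def open_phrase_db() -> sqlite3.Connection:
    """Create an in-memory stand-in for the phrase DB with two sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE phrases (gesture TEXT PRIMARY KEY, sentence TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO phrases VALUES (?, ?)",
        [
            ("thumbs_up", "Thank you"),            # hypothetical gesture name
            ("wave", "See you again next time"),   # hypothetical gesture name
        ],
    )
    return conn


def sentence_for(conn: sqlite3.Connection, gesture: str) -> Optional[str]:
    """Return the preset sentence for a recognized gesture, or None if unknown."""
    row = conn.execute(
        "SELECT sentence FROM phrases WHERE gesture = ?", (gesture,)
    ).fetchone()
    return row[0] if row else None
```

The returned sentence would then be handed to whatever text-to-speech component drives the speaker. An unknown gesture returns `None`, so nothing is spoken by mistake.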

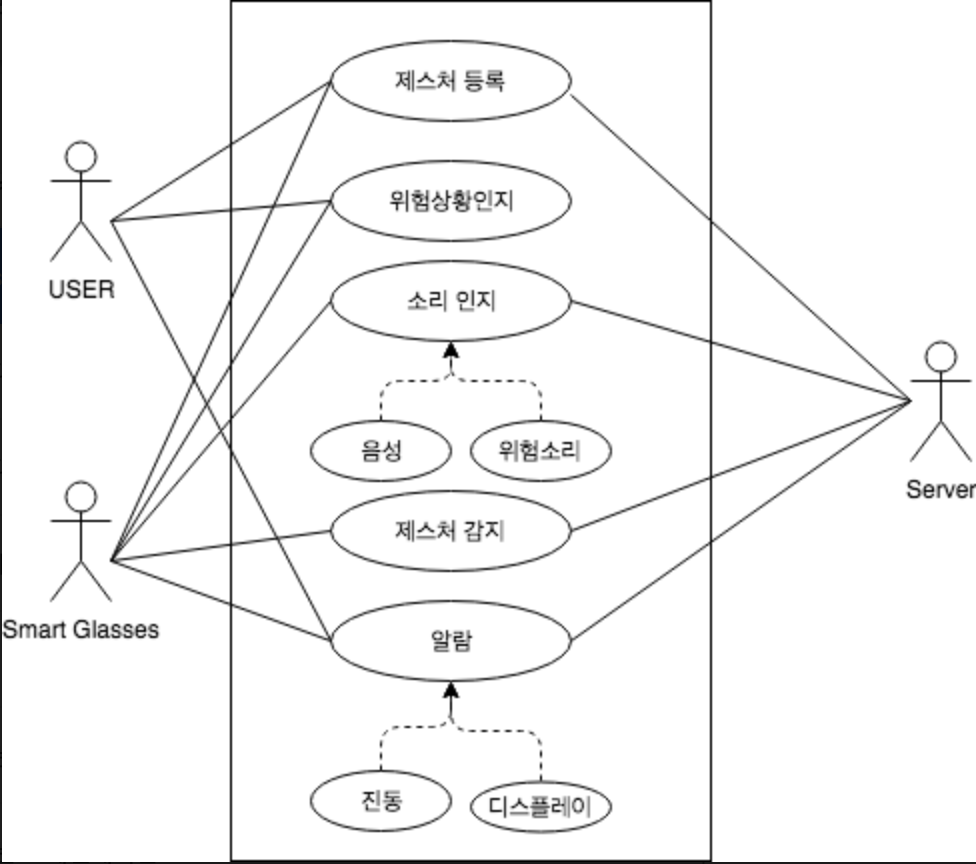

4. Use Case Diagram

5. Introduction Video

6. Results Video

- url :